When developers leave or switch roles, they often take critical knowledge with them – like system architecture, business logic, and troubleshooting strategies. Without proper knowledge transfer, teams face reduced productivity, confusion, and even higher turnover rates. Here’s how to prevent that:

Key Strategies for Knowledge Transfer:

- Structured Transition Plans: Identify critical knowledge, assign roles, and use checklists to ensure smooth handoffs.

- Comprehensive Documentation: Maintain updated guides, runbooks, and a centralized knowledge base for easy access.

- Collaborative Practices: Use pair programming, mob programming, and cross-team reviews to share insights in real time.

- Self-Service Portals: Create user-friendly platforms with searchable, mobile-friendly documentation.

- Measure and Improve: Track usage, test retention, and adapt based on feedback to refine your approach.

Why It Matters:

- Companies with strong knowledge-sharing systems see 25% higher productivity and 35% lower turnover.

- New hires are 50% more productive when onboarding includes proper knowledge transfer.

By following these steps, you can preserve expertise, ensure business continuity, and foster collaboration across your development teams.


Building a Knowledge Transfer Framework

A well-thought-out framework transforms messy, ad-hoc knowledge sharing into a repeatable process that safeguards key expertise and eliminates the need to reinvent the wheel. By addressing knowledge silos and capturing unspoken insights, such a framework ensures smooth transitions and supports continuity. At OpenArc, we focus on creating frameworks for knowledge transfer that enhance transitions and sustain progress in custom software development projects.

The best frameworks combine established methods with adaptable strategies. They acknowledge the importance of both explicit documentation and the nuanced, experience-driven knowledge held by seasoned developers. A strong framework lays the groundwork for effective documentation and collaboration, which we’ll explore further in the following sections.

Creating Step-by-Step Transition Plans

Transition plans act as roadmaps for transferring ownership of systems, codebases, and processes from one person to another. These plans are essential for preventing knowledge loss when team members leave or shift roles.

A solid transition plan begins with a knowledge audit. This involves identifying what needs to be transferred, who holds the knowledge, and where documentation gaps exist. The audit should cover not just technical details but also business context, stakeholder relationships, and the historical decisions that shaped the system’s current state.
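One way to make the audit concrete is to record each knowledge area with who holds it and whether it is documented, then flag what is at risk when a specific person leaves. The sketch below is a minimal illustration; the `KnowledgeArea` fields, the risk rule, and the sample names are assumptions, not a prescribed format.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeArea:
    """One row of a knowledge audit (field names are illustrative)."""
    name: str
    holders: list[str] = field(default_factory=list)  # people who can explain it
    documented: bool = False                          # does written documentation exist?


def audit_gaps(areas: list[KnowledgeArea], departing: str) -> list[str]:
    """Return the names of areas at risk when `departing` leaves."""
    at_risk = []
    for area in areas:
        if departing not in area.holders:
            continue
        remaining = [h for h in area.holders if h != departing]
        # Assumed rule: an area is at risk if nobody else holds it,
        # or if it has no written documentation to fall back on.
        if not remaining or not area.documented:
            at_risk.append(area.name)
    return at_risk


areas = [
    KnowledgeArea("billing service architecture", ["dana"], documented=False),
    KnowledgeArea("deploy pipeline", ["dana", "lee"], documented=True),
    KnowledgeArea("vendor contract history", ["lee"], documented=False),
]
print(audit_gaps(areas, departing="dana"))
```

Here only the billing architecture is flagged for Dana's departure, because the deploy pipeline has both a second holder and documentation.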

"Effective knowledge transfer is not just about passing on a list of duties or skills… It’s about transferring insights, approaches, resources, and understanding of nuanced project dynamics." – Sarah Mulcahy, Enboarder

Transition plans should outline clear roles, responsibilities, and timelines. For example:

- Tech leads handle architectural decisions and system design documentation.

- Senior developers focus on coding standards and implementation guides.

- QA leads manage testing protocols.

- Product owners oversee requirements and feature specifications.

To prepare for unexpected changes, include contingency measures like backup documentation and cross-training other team members to step in if needed.

Using Handover Checklists

Handover checklists are invaluable for ensuring nothing gets overlooked during transitions. Unlike generic to-do lists, these checklists are customized to reflect the unique responsibilities and knowledge areas of each role.

A comprehensive handover checklist should include:

- System Access: Credentials, permission levels, security protocols, and backup methods.

- Project Status: Progress updates, upcoming deadlines, and potential challenges.

- Stakeholder Relationships: Key contacts, their roles, communication preferences, and any active concerns.

- Documentation: Code guides, troubleshooting steps, and process overviews.

Here’s an example of how a handover checklist might look:

| Handover Component | Required Elements | Owner | Review Cycle |
|---|---|---|---|
| System Access | Credentials, permissions, security info | IT Admin | Per transition |
| Project Status | Progress updates, deadlines, roadblocks | Project Manager | Weekly |
| Stakeholder Info | Contacts, roles, communication preferences | Team Lead | Monthly |
| Documentation | Code guides, processes, troubleshooting | Senior Developer | Per release |

To keep the process relevant, regularly update your checklist. What worked for last year’s tools might not suit current systems. Businesses with formal handover processes report smoother transitions and fewer disruptions, while those lacking such processes often face confusion, inefficiency, and reduced productivity.
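If the checklist lives in a tracker or script rather than a document, the same components can be stored as data and queried for open items. This is a rough sketch using the components and owners from the example checklist; the `ChecklistItem` type and the `done` flag are hypothetical additions.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    component: str      # e.g. "System Access"
    owner: str          # role responsible for the item
    review_cycle: str   # how often the item is revisited
    done: bool = False


def outstanding(items: list[ChecklistItem]) -> dict[str, list[str]]:
    """Group unfinished components by owner so follow-ups are easy to assign."""
    pending: dict[str, list[str]] = {}
    for item in items:
        if not item.done:
            pending.setdefault(item.owner, []).append(item.component)
    return pending


checklist = [
    ChecklistItem("System Access", "IT Admin", "Per transition", done=True),
    ChecklistItem("Project Status", "Project Manager", "Weekly"),
    ChecklistItem("Stakeholder Info", "Team Lead", "Monthly"),
    ChecklistItem("Documentation", "Senior Developer", "Per release", done=True),
]
print(outstanding(checklist))
```

Grouping by owner keeps the follow-up conversation short: each role sees only what it still owes before the handover closes.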

Writing Runbooks for Critical Systems

Runbooks are essential for managing system emergencies. When something goes wrong – especially during off-hours – a detailed runbook can mean the difference between a quick fix and prolonged downtime.

"Knowledge transfer is the methodical replication of the expertise, wisdom, and tacit knowledge of critical professionals into the heads and hands of their coworkers." – Steve Trautman, The Steve Trautman Co.

A strong runbook provides more than just troubleshooting steps. It includes an overview of the system’s architecture, common failure patterns, escalation procedures, and recovery strategies. Importantly, it explains the reasoning behind each action so responders understand not just what to do, but why.

Here’s how to structure an effective runbook:

- Purpose Statement: Clearly define when to use the runbook and what outcomes to expect.

- Prerequisites: List tools, access, or prior knowledge required.

- Step-by-Step Instructions: Write detailed steps that even someone unfamiliar with the system can follow.
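The three-part structure above can also be enforced with a small check, so an incomplete runbook is caught before it is needed. The sketch assumes runbooks are stored as simple key-value data; the section keys and the sample runbook are illustrative.

```python
# Section keys mirror the structure described above; the names are assumptions.
REQUIRED_SECTIONS = ("purpose", "prerequisites", "steps")


def missing_sections(runbook: dict) -> list[str]:
    """Return required sections that are absent or empty in a runbook."""
    return [name for name in REQUIRED_SECTIONS if not runbook.get(name)]


runbook = {
    "purpose": "Use when the (hypothetical) orders queue stops draining.",
    "prerequisites": ["VPN access", "read access to the queue dashboard"],
    "steps": [],  # left empty on purpose so the check flags it
}
print(missing_sections(runbook))
```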

Visual aids like screenshots, diagrams, and flowcharts can make runbooks even more effective. For instance, when Caddick Construction worked with COGNICA to create operation manuals for the University of London, visual documentation cut training time by 35% and reduced support calls by 40%.

Testing and maintaining runbooks is just as important as creating them. Schedule quarterly drills where team members unfamiliar with the procedures test the instructions. This practice helps identify gaps and ensures the content stays accurate. Version control is also crucial: tag each version with the systems it covers, the latest test date, and any known limitations. For example, BASF Nijehaske used Accruent’s solutions to manage over 20,000 documents, reducing errors by 60% through proper version control.
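The version tags described here (systems covered, latest test date, known limitations) map naturally onto a small record, and the quarterly drill becomes a date comparison. A minimal sketch, assuming a 90-day threshold as a stand-in for "quarterly"; the type and field names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class RunbookVersion:
    version: str
    systems: list[str]                  # systems this version covers
    last_tested: date                   # date of the most recent drill
    known_limitations: list[str] = field(default_factory=list)


def drill_overdue(rb: RunbookVersion, today: date, max_age_days: int = 90) -> bool:
    """True when the last drill is older than max_age_days (roughly one quarter)."""
    return today - rb.last_tested > timedelta(days=max_age_days)


rb = RunbookVersion("1.4.0", ["orders-db"], last_tested=date(2024, 1, 10))
print(drill_overdue(rb, today=date(2024, 5, 1)))
```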

When emergencies arise, the time and effort invested in well-prepared runbooks pay off significantly.

Improving Documentation for Better Access and Use

Documentation only works when teams can easily find, understand, and use it. If developers waste hours searching for information or run into outdated instructions, even the best knowledge base becomes useless. To make documentation effective, it needs to be designed with real users in mind. Good documentation not only preserves knowledge but also streamlines workflows. Below are practical ways to centralize, access, and maintain documentation efficiently.

"Documentation is critical for software projects. Many projects lack documentation, hindering user adoption." – Eric Holscher, co-founder of Write the Docs

A staggering 64% of developers report frustration with poor documentation, and data silos cost employees over two hours daily. At OpenArc, we’ve seen how structured documentation systems can significantly cut onboarding time for new hires and eliminate bottlenecks caused by knowledge being locked in individuals’ minds. The secret? Treat documentation like a product that’s built to serve its users.

Creating a Single Source of Truth

A Single Source of Truth (SSOT) consolidates data into one central repository, eliminating duplicates, optimizing storage, and ensuring everyone operates from the same set of facts. For SSOT to work, leadership needs to back it, and clear rules for data quality must be in place. This setup ensures consistent access to critical information and complements structured transition plans.

Using consistent naming conventions is a simple yet powerful way to keep documents organized and easy to locate. For instance, instead of having files named "API_docs_v2", "api-documentation-final", and "API_Guide_Latest", opt for a standard like "API_Documentation_v2.1_2024-05-26." A centralized Document Management System (DMS) – like Google Drive, Microsoft SharePoint, or Dropbox – also plays a key role in maintaining order. Role-based access controls protect sensitive information by restricting it to the right people, while regular reviews and archiving outdated documents prevent clutter.
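A naming convention is easiest to keep when a script can check it. The sketch below encodes the example standard (`Name_Parts_vMAJOR.MINOR_YYYY-MM-DD`) as a regular expression; the exact pattern is an assumption inferred from that single example, and it checks only the shape of the date, not whether it is a real calendar day.

```python
import re

# Words joined by underscores, then _v<major>.<minor>, then _YYYY-MM-DD.
NAME_PATTERN = re.compile(r"[A-Za-z]+(?:_[A-Za-z]+)*_v\d+\.\d+_\d{4}-\d{2}-\d{2}")


def follows_convention(name: str) -> bool:
    """Check a document name (without file extension) against the pattern."""
    return NAME_PATTERN.fullmatch(name) is not None


for name in ["API_Documentation_v2.1_2024-05-26", "API_docs_v2", "api-documentation-final"]:
    print(name, follows_convention(name))
```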

API gateways can further simplify things by acting as centralized hubs, automating and standardizing API documentation processes. These gateways provide cross-team visibility, role-based access, and support for distributed teams.

Building Self-Service Documentation Portals

Self-service portals turn documentation into an active tool for solving problems. With 81% of users preferring self-service options and 65% of support teams reporting fewer calls as a result, the benefits are clear. Building an effective portal starts with understanding your audience – conduct research and create user personas to ensure the portal addresses actual needs.

Focus on top tasks by making them easy to find on the main page. For example, one self-service portal cut parcel shipment times by 50%. Adding an AI-powered search engine that understands natural language queries can further improve the user experience. Since 88% of users expect mobile-friendly options, ensuring the portal works seamlessly on mobile devices is a must. Proactively suggesting relevant content can also guide users to the answers they need, reducing support cases.

Keeping API and Code Documentation in Sync

Keeping API and code documentation aligned is crucial for smooth knowledge sharing. Automating API documentation ensures reference materials stay accurate and up to date. Using code-based specifications like OpenAPI ties documentation directly to the code, minimizing inconsistencies. The OpenAPI Specification (OAS) covers details like parameters, headers, and error messages, making it a comprehensive resource.

Take Pipedrive as an example: the company released an automated API documentation and TypeScript type generation system in October 2023, and 15 teams adopted it within eight months. Nearly 60 services now offer automated API schemas, thanks to integrating this process into their existing service templates.

Separating descriptive text from technical definitions in the OAS allows technical writers and developers to focus on clarity and accuracy without stepping on each other’s toes. Teams that adopt automated documentation testing report 40% fewer support tickets about API usage. Using semantic versioning (e.g., MAJOR.MINOR.PATCH) ensures documentation reflects breaking changes and clarifies which version is in use. Treating documentation as part of the code – by integrating it into CI/CD pipelines – keeps updates consistent and ensures documentation evolves alongside the software.
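As a rough illustration of documentation testing in a pipeline, the sketch below walks an OpenAPI document that has already been parsed into a dictionary and lists operations with neither a `summary` nor a `description`. The sample spec is hypothetical, and a real pipeline would load the file from the repository and decide for itself whether a non-empty result fails the build.

```python
# HTTP methods that can hold an Operation Object under an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def undocumented_operations(spec: dict) -> list[str]:
    """List 'METHOD /path' entries that lack both a summary and a description."""
    missing = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            if not (operation.get("summary") or operation.get("description")):
                missing.append(f"{method.upper()} {path}")
    return missing


spec = {
    "openapi": "3.0.3",
    "info": {"title": "Example API (hypothetical)", "version": "1.2.0"},
    "paths": {
        "/users": {
            "get": {"summary": "List users", "responses": {"200": {"description": "OK"}}},
            "post": {"responses": {"201": {"description": "Created"}}},
        }
    },
}
print(undocumented_operations(spec))
```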

Promoting Knowledge Sharing Through Team Collaboration

Documentation lays the groundwork for transferring knowledge, but the magic truly happens when teams work together actively. When developers collaborate in real time, they break down barriers and enable both explicit and tacit knowledge to flow freely. This kind of teamwork builds on structured documentation to form a dynamic ecosystem for knowledge sharing.

For instance, teams that hold daily stand-ups report a 17% faster project completion rate, while those leveraging well-integrated communication tools see a 74% boost in productivity. Shifting from passive information exchange to active collaboration can make all the difference.

Running Cross-Team Architecture Reviews

Architecture Review Boards (ARBs) play a vital role in ensuring technology projects align with an organization’s overarching architectural framework and strategic goals. These boards typically include senior architects, stakeholders, and domain experts who evaluate, approve, or reject architectural designs to maintain consistency across areas like data, applications, and security.

"In the world of software development, the Architecture Review Board is the compass that guides teams towards architectural excellence, fostering innovation while maintaining strategic alignment."

For example, a software company might establish an ARB to ensure architectural consistency across its products and mitigate risks tied to architectural decisions. These boards are responsible for reviewing proposed changes, setting standards, and aligning designs with long-term objectives.

The review process usually takes place after design but before implementation. Teams submit proposals using standardized templates, which are then prioritized based on factors like urgency, project impact, or alignment with company strategies. ARBs often meet bi-weekly or monthly, with agendas covering proposal reviews, ongoing challenges, and decision-making through consensus or voting.

Decisions are documented using Architectural Decision Records (ADRs), which are stored in version-controlled repositories for easy access. These repositories can be simple, such as a shared document location or a wiki.
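ADRs are short enough to generate from a template, which helps keep numbering and file names consistent in the repository. The layout below (context, decision, consequences) is one commonly used structure rather than anything prescribed here, and the `ADR` class, its fields, and the sample decision are illustrative.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ADR:
    number: int
    title: str
    status: str        # e.g. "proposed", "accepted", "superseded"
    context: str
    decision: str
    consequences: str
    decided_on: date

    def filename(self) -> str:
        """Zero-padded number plus a lowercase, hyphenated title."""
        slug = "-".join(self.title.lower().split())
        return f"{self.number:04d}-{slug}.md"

    def render(self) -> str:
        """Return the record as plain text ready to commit."""
        return "\n".join([
            f"# {self.number}. {self.title}",
            f"Date: {self.decided_on.isoformat()}",
            f"Status: {self.status}",
            "", "## Context", self.context,
            "", "## Decision", self.decision,
            "", "## Consequences", self.consequences,
        ])


adr = ADR(
    number=7,
    title="Use PostgreSQL for order data",
    status="accepted",
    context="Order data needs transactional guarantees.",
    decision="Store orders in PostgreSQL.",
    consequences="The team must maintain database migrations.",
    decided_on=date(2024, 5, 26),
)
print(adr.filename())
print(adr.render())
```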

"The ARB isn’t just about decisions; it’s about fostering collaboration and wisdom-sharing among diverse expertise, creating a tapestry of informed choices."

To improve efficiency, consider rotating stakeholders regularly to share knowledge and workload. Automated architecture review tools can also help members focus on their areas of expertise. Retrospective sessions provide valuable feedback, helping refine decision-making processes as the organization evolves.

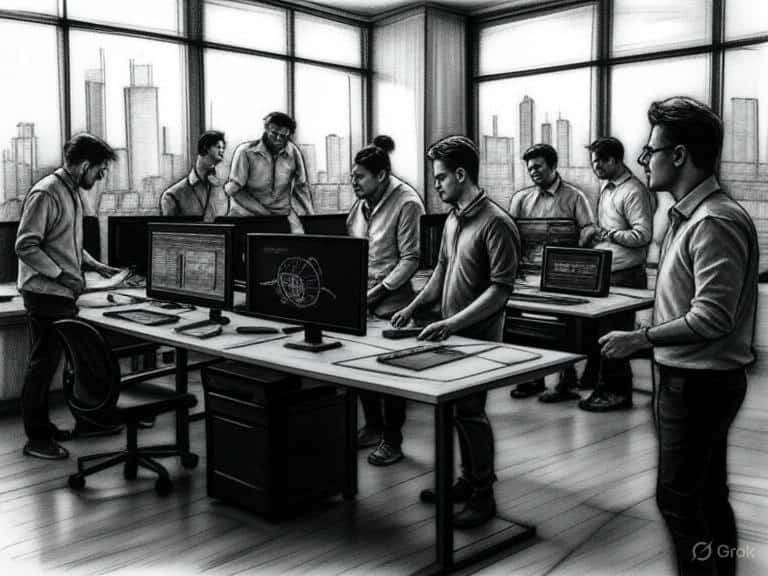

Using Collaborative Programming Sessions

Beyond formal reviews, direct programming collaboration is an excellent way to strengthen knowledge exchange. Techniques like pair programming and mob programming are especially effective for sharing tacit knowledge that’s hard to document. For instance, MYOB saw a tenfold performance boost after adopting mob programming, making their team the company’s top performer.

In pair programming, engineers alternate between "driver" and "navigator" roles, ensuring both parties learn and contribute equally. Remote teams can achieve similar results using screen sharing or shared terminals.

Mob programming takes this concept further, with three or more people tackling the same issue simultaneously. At Service NSW, mob programming helped a team of six engineers master Test-Driven Development within five weeks. Similarly, Lexicon used mob programming to complete a migration project in just three months.

Psychological safety is a key factor – team members need to feel comfortable sharing their ideas and concerns. Teams should also set clear goals for collaborative programming sessions to ensure they stay focused and productive.

"Psychological Safety is a must, and the entire team needs to understand the value of trying Mob Programming for the task at hand, with a clear goal of solving the specific issues they are facing." – Fayner Brack

Running commentary during pair programming sessions can make the reasoning behind coding decisions more transparent, enhancing the learning experience. These sessions can also serve as informal code reviews, reducing defect rates by up to 85%. Incorporating these practices into your knowledge-sharing strategy can lead to long-term success.

Managing Shared Component Libraries

Shared component libraries are invaluable for maintaining consistency and speeding up development across teams. However, their success hinges on proper management and clear documentation that outlines each component’s functionality and limitations.

Versioning is critical for managing shared libraries. Semantic versioning, for example, helps teams make informed decisions about backward compatibility. Smaller projects might rely on direct project references, but larger applications benefit from distributing libraries as versioned packages (NuGet packages in .NET, for example), which allow for gradual updates without disrupting dependent systems.
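To show how semantic versioning informs an upgrade decision, the sketch below classifies the difference between two plain `MAJOR.MINOR.PATCH` strings. It is deliberately minimal: pre-release and build suffixes are not handled, and the function names are illustrative.

```python
def parse(version: str) -> tuple[int, int, int]:
    """Split 'MAJOR.MINOR.PATCH' into integers (no pre-release suffixes)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def change_kind(old: str, new: str) -> str:
    """Return which component differs first: 'major', 'minor', 'patch', or 'none'."""
    o, n = parse(old), parse(new)
    if n[0] != o[0]:
        return "major"   # may break consumers; review before upgrading
    if n[1] != o[1]:
        return "minor"   # new functionality, intended to be backward compatible
    if n[2] != o[2]:
        return "patch"   # bug fixes only
    return "none"


print(change_kind("1.4.2", "2.0.0"))
print(change_kind("1.4.2", "1.5.0"))
```

A dependent team could run a check like this in its update job and require a manual review only when the result is `"major"`.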

To avoid compatibility issues, encapsulate internal logic and adhere to the Single Responsibility Principle, keeping libraries small and maintainable. Avoid embedding business rules in shared libraries; instead, use Data Transfer Objects (DTOs) to maintain flexibility.

When implementing shared UI components in a framework with multiple render modes (Blazor, for example), consider making them render mode agnostic, allowing the application project to set or inherit the render mode. A well-designed CI/CD pipeline can automate the publishing of new library versions, ensuring smooth updates across projects. Centralized dependency management tools further simplify version control, while robust testing ensures system stability during updates.

"Knowledge management doesn’t happen until somebody reuses something." – Stan Garfield

The human element is just as important as the technical one. Teams need to embrace a culture where reusing existing components is valued over reinventing the wheel.

At OpenArc, the most successful shared libraries are those that address recurring challenges. When components solve real problems and come with clear documentation and examples, adoption becomes a natural outcome. This unified approach to managing shared libraries strengthens your team’s ability to share and retain critical knowledge.


Measuring and Improving Knowledge Transfer Results

To make knowledge transfer truly effective, it’s important to measure its outcomes and refine the process continuously. Research shows that organizations with strong knowledge management systems experience a 25% increase in employee productivity, and 75% of businesses consider knowledge management a key part of their strategy.

While tracking input metrics is relatively straightforward, output metrics provide deeper insights into the results and overall impact. By combining these two approaches, teams can better understand both the technical and human aspects of knowledge sharing. Let’s explore how to measure and improve knowledge transfer efforts.

Tracking Team Contributions and Documentation Usage

Tools like version control systems and analytics platforms can help track how knowledge is being shared and utilized across teams. These tools offer valuable insights into contribution rates, documentation usage, and patterns of collaboration.

- Access and usage metrics: These metrics indicate how accessible and frequently used your knowledge resources are. For example, tracking page views, unique visitors, and how often specific documentation is accessed can reveal which resources are most valuable.

- Contribution and engagement metrics: These metrics focus on the human element of knowledge sharing. By monitoring the number of contributions, user feedback, and participation rates, you can see how actively team members are involved. This also helps identify "knowledge champions" – individuals who consistently contribute and mentor others.

| Metric Category | Examples | Purpose |
|---|---|---|
| Access and Usage | Page views, unique visitors, usage frequency | Measure resource utilization |
| Contribution and Engagement | Number of contributions, feedback, participation | Track team involvement |
| Quality and Accuracy | User ratings, accuracy checks, update timeliness | Maintain content relevance |
| Efficiency | Time to find info, resolution time, search speed | Improve knowledge retrieval |
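The access and usage row is usually the simplest to compute. As a sketch, assume the documentation portal can export view events as `(user, page)` pairs; the event format and sample data below are hypothetical.

```python
from collections import Counter

# Each event is (user, page); in practice this would come from portal analytics.
events = [
    ("ana", "/runbooks/orders-db"),
    ("ben", "/runbooks/orders-db"),
    ("ana", "/runbooks/orders-db"),
    ("ana", "/guides/onboarding"),
]


def usage_summary(events: list[tuple[str, str]]) -> dict[str, dict[str, int]]:
    """Compute page views and unique visitors per page."""
    views = Counter(page for _, page in events)
    visitors: dict[str, set[str]] = {}
    for user, page in events:
        visitors.setdefault(page, set()).add(user)
    return {
        page: {"views": views[page], "unique_visitors": len(visitors[page])}
        for page in views
    }


print(usage_summary(events))
```

Comparing views with unique visitors helps separate a page that many people rely on from one that a single person reloads often.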

Recognizing and rewarding high contributors can motivate others to participate more actively. When team members feel their efforts are appreciated, they’re more likely to stay engaged in knowledge-sharing initiatives.

Regular quality checks are also crucial. Metrics like user ratings and content accuracy ensure that knowledge remains relevant and up-to-date. This kind of monitoring prevents outdated or incorrect information from derailing team efforts.

Testing Knowledge Retention

Knowledge retention is about more than just storing information – it’s about ensuring that people can recall and apply it when needed. Testing retention can uncover gaps between what’s documented and what’s understood.

Team retrospectives are a great way to evaluate knowledge retention. These sessions allow team members to discuss which resources were helpful, identify areas where information is missing, and assess how effectively they’ve applied shared knowledge in real-world situations.

- Efficiency metrics: Metrics like the time it takes to find information, resolve issues, or use search tools effectively can reveal how well teams are internalizing knowledge. Improvements in these areas suggest that knowledge transfer efforts are paying off.

- Practical application: Instead of relying on quizzes or formal tests, observe how team members apply knowledge in real scenarios. For instance, track how quickly new hires become productive, how effectively team members troubleshoot issues, or how well they can explain complex systems to others.

Additionally, learning and development metrics – such as training completion rates and documented skill improvements – can connect knowledge-sharing efforts to individual and organizational growth. These insights help refine processes and ensure they’re meeting the team’s needs.

Making Improvements Based on Feedback

The best knowledge transfer processes are constantly evolving. Regular feedback sessions provide an opportunity for teams to highlight missing information, suggest updates, and refine how knowledge is shared.

Feedback collection works best when it’s systematic. Encourage team members to ask questions, share constructive feedback, and apply their newly gained knowledge in practical ways. This approach helps uncover strengths and weaknesses in your knowledge-sharing efforts, paving the way for targeted improvements.

- Strategic metrics: These metrics align knowledge-sharing initiatives with broader business goals. For example, when introducing a new project management process, track metrics like project timelines and budgets alongside team feedback to gauge its effectiveness.

- Innovation metrics: Knowledge sharing can drive creativity. By monitoring the number of new ideas generated or successful innovations resulting from collaboration, you can measure how well your knowledge transfer efforts support creative thinking.

Combining data with feedback creates a well-rounded approach to improvement. Reviewing analytics alongside team input allows you to identify pain points and address them effectively. This ensures that knowledge transfer stays relevant, efficient, and aligned with your team’s evolving needs.

The ultimate goal isn’t to chase perfect metrics – it’s to gain actionable insights that lead to better ways of sharing and retaining knowledge.

Making Knowledge Transfer a Long-Term Practice

Knowledge transfer isn’t a one-time task; it’s a continuous effort that requires dedication and support across the board. Inefficient knowledge sharing is costly, both in hours spent searching for information and in work that gets redone. As discussed earlier, having structured processes and well-organized documentation lays the foundation for a workplace culture that prioritizes sharing knowledge. The strategies outlined in this guide offer a clear path for development teams to effectively share and retain critical information.

Key Takeaways

To make knowledge transfer successful, focus on four core pillars: structured frameworks, accessible documentation, collaborative practices, and metrics-driven improvement. Together, these pillars address both the technical and human sides of knowledge sharing.

- Structured frameworks and documentation: Reliable frameworks and a single source of truth for documentation prevent information loss, especially during transitions. This streamlines workflows by reducing the time spent searching for information, so teams can focus on creating high-quality solutions.

- Collaborative practices: Activities like cross-team architecture reviews and pair programming naturally encourage knowledge exchange. For instance, a senior developer at a fintech startup shared, "We pair junior devs with seniors for at least 2 hours daily. Our onboarding time dropped from 3 months to 3 weeks, and code quality improved dramatically."

- Metrics-driven improvement: Tracking outcomes like usage patterns and retention rates ensures that knowledge-sharing efforts are delivering results. These insights help teams refine their strategies and justify investing further in knowledge transfer initiatives.

By integrating these elements, organizations can establish a lasting culture of knowledge sharing.

Building a Culture of Knowledge Sharing

While structured frameworks and documentation are critical, creating a supportive culture is just as important for sustaining knowledge transfer. This goes beyond tools and processes – it requires a mindset shift across the organization. Many employees still face challenges accessing information, underlining the need for broader cultural change.

Leadership plays a pivotal role here. When managers actively contribute to documentation and recognize team members who share their expertise, it reinforces the importance of knowledge sharing. NASA’s initiative, NASA@WORK, is a great example of this. It’s a platform that enables collaboration across departments, regardless of physical location.

Accountability is another key factor. Incorporating knowledge-sharing metrics into performance evaluations can make a big difference. For example, a SaaS company introduced "Friday Learning Sessions," where team members take turns sharing something they learned during the week. A lead developer noted, "These sessions not only spread knowledge but also built a culture where learning is celebrated." Regular, structured sharing like this helps make knowledge exchange feel natural rather than forced.

To get started, focus on addressing specific pain points. Choose tools that integrate seamlessly with existing workflows and support both real-time and asynchronous communication. Once teams see the benefits, they’ll be more open to adopting additional practices.

Finally, fostering psychological safety is essential. Team members need to feel comfortable asking questions and admitting when they don’t know something. When knowledge sharing becomes a trusted, collaborative process, it creates an environment where everyone can learn and contribute effectively.

For companies with 1,000 employees, poor knowledge sharing can lead to productivity losses of $2,700 per employee annually. Investing in knowledge transfer not only reduces these losses but also strengthens the organization as a whole.

FAQs

What are the best practices for ensuring effective knowledge transfer across development teams over time?

To ensure that knowledge is effectively shared and retained across development teams, organizations should adopt a well-structured and team-oriented approach. Begin by pinpointing the critical knowledge areas – this includes documenting workflows, essential skills, and practical insights in a format that’s easy for everyone to access and understand. Tools like training sessions, mentorship programs, and digital platforms can play a key role in capturing and distributing this information.

Equally important is cultivating a culture that values open communication and teamwork. Keep knowledge repositories up to date, actively involve team members in the sharing process, and create mentorship opportunities to ensure information stays relevant and useful. With these strategies in place, companies can minimize the risk of losing expertise during transitions and enhance overall productivity.

What challenges do teams face when creating a Single Source of Truth for documentation, and how can they address them?

Creating a Single Source of Truth (SSOT) for documentation isn’t always straightforward. Teams often face hurdles like scattered information spread across various tools, inconsistent documentation habits, and insufficient training. These challenges can result in confusion, wasted time, and reliance on outdated or incorrect data.

To overcome these issues, it’s essential to implement a centralized system where all critical information is stored in one place. Establishing clear documentation guidelines – such as standardized naming conventions and regular update procedures – helps maintain consistency and accuracy. Additionally, holding routine training sessions equips team members to use and manage the system effectively, promoting better teamwork and smoother knowledge sharing.

How do pair programming and mob programming help development teams share knowledge effectively?

Pair Programming and Mob Programming: Collaborative Coding at Its Best

Pair programming and mob programming are two collaboration techniques that can significantly boost knowledge sharing within development teams.

In pair programming, two developers work together at a single workstation. One writes the code while the other reviews it in real-time. This setup allows for immediate feedback, quicker onboarding for new team members, and a steady exchange of skills. Beyond improving the quality of the code, it also builds a culture of teamwork and ongoing learning.

Mob programming takes this collaborative spirit even further. Here, the entire team works on the same task simultaneously. With everyone contributing, this method spreads knowledge, highlights best practices, and brings together insights from all experience levels. It strengthens team cohesion, minimizes misunderstandings, and sparks creativity by bringing diverse perspectives to the table. Plus, it helps ensure that critical knowledge stays within the team, even if members move on.

Both approaches go beyond just writing code – they create an environment where teams grow stronger and smarter together.