How AI Makes Software Testing Smarter: A Practical Guide for QA Teams

AI and software testing have become inseparable for QA teams that want to stay competitive. The World Quality Report 2018-19 identifies artificial intelligence as one of the biggest trends in QA and testing for the next decade. Organizations need strategies around this technology now.

Here’s what AI does for software test automation: it generates and adapts test cases based on real-time data with far less manual intervention than hand-written suites require. AI tools analyze your requirements, code changes, and existing test cases to automatically find potential edge cases that you might miss. This improves test coverage while cutting down the manual work your team has to do.

AI executes tests automatically across different environments. It validates results and catches visual inconsistencies that could hurt user experience. AI models study historical data to predict where defects might show up, so your team can focus testing efforts on the areas most likely to have problems.

This guide walks you through implementing AI-driven testing in your QA processes. I’ll show you the specific tools available today and how to tackle common challenges when you adopt this technology. Whether you’re just getting started with AI or want to improve your existing automation framework, you’ll find actionable insights to make your testing smarter and more efficient.

Core AI Capabilities That Power Smarter Testing

“Test automation isn’t automatic; it requires a lot more thought and design than just recording a test script.” — Michael Bolton, Consulting software tester and testing teacher

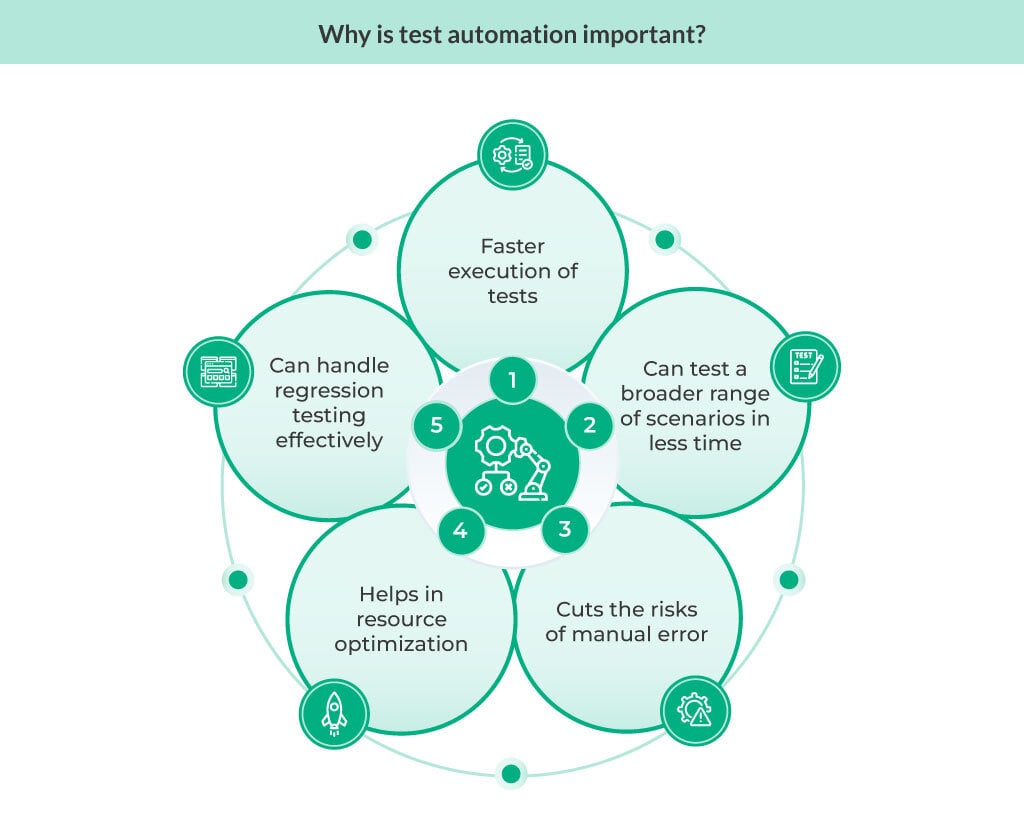

Image Source: Testbytes

Three AI capabilities are changing how QA teams work. These technologies enhance your test processes and improve software quality in ways that weren’t possible before.

Machine Learning for Pattern Recognition in Test Failures

Machine learning handles vast amounts of test data that you simply can’t process manually. This capability changes how your team understands and responds to test failures.

ML algorithms study historical test results to find common failure patterns, so your team can streamline detection and triage of the most persistent errors. Instead of treating each failure as a separate incident, ML shows you the connections between seemingly unrelated issues. Some commercial platforms, for example, apply proprietary machine learning algorithms to test pass/fail data to uncover patterns that affect overall test suite performance [21].

Here’s what this means for your daily work:

- Predicting which application parts will likely fail based on code changes

- Ranking patterns by their system impact

- Finding root causes faster by connecting related failures

ML models predict defect likelihood in various software parts by analyzing past commit data and test results [5]. Your testers can focus efforts strategically and catch potential bugs early in the development cycle. Testing resources get allocated more efficiently to high-risk areas.
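To make the failure-grouping idea concrete, here is a minimal Python sketch. It is not machine learning: a hand-written normalization rule stands in for the clustering a trained model would learn, and the function names (failure_signature, group_failures) and the sample messages are hypothetical.

```python
import re
from collections import defaultdict


def failure_signature(message: str) -> str:
    """Reduce an error message to a stable signature by masking volatile parts."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses
    sig = re.sub(r"\d+", "<num>", sig)                 # ids, ports, timings
    sig = re.sub(r"\s+", " ", sig).strip().lower()     # whitespace and case
    return sig


def group_failures(failures):
    """Group (test_name, message) pairs by signature, largest group first."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[failure_signature(message)].append(test_name)
    return sorted(groups.items(), key=lambda item: len(item[1]), reverse=True)


if __name__ == "__main__":
    sample = [
        ("test_login", "Timeout after 3000 ms connecting to db-7:5432"),
        ("test_checkout", "Timeout after 4500 ms connecting to db-2:5432"),
        ("test_profile", "AssertionError: expected 200 got 500"),
    ]
    for signature, tests in group_failures(sample):
        print(len(tests), signature, tests)
```

Two timeouts with different numbers collapse into one group, which is the kind of connection between seemingly unrelated failures the section describes. A real ML-based tool would learn these groupings from data instead of relying on fixed rules.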

Natural Language Processing for Test Case Generation

NLP bridges the gap between human language and executable test scripts. This makes test automation accessible to team members without coding expertise. The technology interprets natural language inputs like user stories and requirements documents to generate test cases [5].

The process works like this:

- Analyzing requirements documents through NLP techniques

- Extracting relevant information about testing requirements

- Converting information into structured test cases

- Translating human-readable instructions into executable tests

One study identified seven distinct NLP techniques and seven algorithms specifically for test case generation [5]. These approaches let testers write test scripts in plain English rather than complex programming languages. Instead of writing code, a tester enters commands like “enter ‘John’ into ‘First Name’” and “click ‘Register’” [5].
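The translation from a plain-English command to a structured test step can be sketched in a few lines of Python. This is a simplified illustration that uses fixed regular-expression patterns in place of real NLP, and the Step type and the action names "type" and "click" are assumptions made for the example.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    action: str
    target: str
    value: Optional[str] = None


# Each pattern maps one sentence shape to one action (hypothetical grammar).
_PATTERNS = [
    (re.compile(r"^enter '(?P<value>[^']*)' into '(?P<target>[^']+)'$", re.I), "type"),
    (re.compile(r"^click '(?P<target>[^']+)'$", re.I), "click"),
]


def parse_step(text: str) -> Step:
    """Convert one plain-English command into a structured Step."""
    # Treat typographic and straight single quotes the same way.
    normalized = text.strip().replace("‘", "'").replace("’", "'")
    for pattern, action in _PATTERNS:
        match = pattern.match(normalized)
        if match:
            fields = match.groupdict()
            return Step(action=action, target=fields["target"], value=fields.get("value"))
    raise ValueError(f"Unrecognized step: {text!r}")


if __name__ == "__main__":
    for line in ["enter 'John' into 'First Name'", "click 'Register'"]:
        print(parse_step(line))
```

An NLP-based tool would handle far more varied phrasing. The point here is only the shape of the output: a structured step that an automation framework can execute.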

NLP-powered tools evaluate test results, summarize them, and generate reports without manual input [3]. This streamlines your testing process significantly.

Computer Vision for UI Regression Testing

Visual regression testing ensures code changes don’t hurt your application’s user interface. Traditional UI testing approaches have limitations that AI-powered computer vision solves effectively.

Visual AI combines machine learning and computer vision to identify visual defects in web pages. Rather than inspecting individual pixels, it recognizes elements with properties like dimensions, color, content, and placement—similar to how the human eye works [6]. This approach overcomes the problems of traditional pixel-based comparison methods.

Computer vision capabilities include:

- Detecting misaligned buttons, overlapping images or texts, and partially visible elements

- Validating UI consistency across different browsers and devices

- Creating consolidated reports with clearly marked visual differences

Visual AI mimics the human eye and brain but doesn’t get tired or bored [6]. This makes it ideal for thorough UI testing. Modern websites often contain hundreds of pages with millions of elements, making manual visual testing impractical.
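To show what comparing elements by their properties can look like, here is a small Python sketch. It assumes the element boxes have already been extracted (a real tool would get them from a vision model or the DOM), and the Element type, the compare_layouts function, and the 2-pixel tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    name: str
    x: int
    y: int
    width: int
    height: int
    text: str = ""


def compare_layouts(baseline, candidate, tolerance_px=2):
    """Return human-readable differences between two lists of elements."""
    diffs = []
    current = {el.name: el for el in candidate}
    for base in baseline:
        new = current.get(base.name)
        if new is None:
            diffs.append(f"{base.name}: missing")
            continue
        # Small shifts within the tolerance are treated as rendering noise.
        if abs(new.x - base.x) > tolerance_px or abs(new.y - base.y) > tolerance_px:
            diffs.append(f"{base.name}: moved from ({base.x}, {base.y}) to ({new.x}, {new.y})")
        if (abs(new.width - base.width) > tolerance_px
                or abs(new.height - base.height) > tolerance_px):
            diffs.append(
                f"{base.name}: resized from {base.width}x{base.height} "
                f"to {new.width}x{new.height}"
            )
        if new.text != base.text:
            diffs.append(f"{base.name}: text changed from {base.text!r} to {new.text!r}")
    return diffs
```

A one-pixel shift passes silently, while a button that moves 15 pixels or disappears is reported by name. That is the practical difference from a pixel comparison, which would flag both cases equally.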

These three core AI capabilities form the foundation of next-generation testing approaches. Together they make quality assurance more accurate and more efficient than traditional methods alone.

Top Use Cases of AI in Software Testing

AI creates practical solutions for testing challenges your team deals with every day. I’ve seen these applications deliver real value for QA professionals who want to work smarter, not harder.

Automated Test Case and Script Generation

Creating test cases traditionally eats up massive amounts of time. AI changes this by studying your requirements documents, code, and user stories to generate test cases automatically. The technology uses natural language processing to pull key information from business requirements and spot potential test scenarios [7].

The scale of the problem explains the appeal. A recent whitepaper found that a well-equipped mid-sized vehicle requires managing 450,000 software and electronics requirements [7], far more than a team can cover with hand-written test cases. AI solutions can cut test case creation time by up to 80% while keeping quality high [7].

But here’s what you need to know: many current tools generate test cases for manual execution rather than fully automated, executable tests [8]. Your human testers still play a crucial role in checking that AI-generated test suites are complete and accurate, especially for edge cases or complex scenarios.

Synthetic Test Data Creation for Edge Cases

Testing with real production data creates privacy headaches and limits what scenarios you can test. AI solves this by generating synthetic data that acts like real-world data without the risks.

Synthetic data generation builds artificial data that copies the statistical properties of real information [9]. This gives you serious advantages:

- Expand training datasets when real-world data costs too much or is hard to get

- Test and validate different scenarios without privacy worries

- Simulate rare but important edge cases [10]
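As a rough illustration of copying statistical properties, the Python sketch below fits a mean and standard deviation to a handful of real values and then samples synthetic ones, with edge cases appended explicitly. It assumes the values are roughly normally distributed, which is a simplification; the function names and sample numbers are hypothetical, and production-grade generators model far more structure than this.

```python
import random
import statistics


def fit_profile(values):
    """Summarize real values by mean and standard deviation."""
    return {"mean": statistics.mean(values), "stdev": statistics.stdev(values)}


def generate_synthetic(profile, count, seed=0, edge_cases=()):
    """Sample synthetic values from the profile, then append explicit edge cases.

    Assumes a normal distribution, so samples can fall outside the real
    data's range (including below zero).
    """
    rng = random.Random(seed)  # seeded so test runs are reproducible
    samples = [rng.gauss(profile["mean"], profile["stdev"]) for _ in range(count)]
    return samples + list(edge_cases)


if __name__ == "__main__":
    real_order_totals = [42.0, 55.5, 61.0, 38.2, 70.1, 49.9]
    profile = fit_profile(real_order_totals)
    data = generate_synthetic(profile, 1000, seed=7, edge_cases=[0.0, 1e9])
    print(round(statistics.mean(data[:1000]), 2), data[-2:])
```

No real record appears in the output, yet the bulk of the data behaves like the original, and the rare cases you care about are guaranteed to be present.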

Healthcare shows how valuable this gets. Tools like Synthea create synthetic patients for healthcare analytics, letting medical researchers work without privacy or ethical concerns [11]. Industries with strict data protection rules find this particularly useful.

Visual Testing for Cross-Browser Consistency

Your application’s UI needs to work consistently across different browsers and devices. Visual regression testing handles this, but traditional approaches miss subtle visual defects. AI-powered visual testing gives you better coverage.

The process captures baseline images of your UI and compares them to images taken after code changes [12]. When the system finds differences, it flags them and creates a report. Your development team can quickly spot unwanted changes.

Visual AI identifies elements by their properties, such as dimensions, color, content, and placement, similar to how your eye works [12]. This beats traditional pixel-by-pixel comparison, which flags every rendering difference regardless of whether a user would notice it. Modern websites with hundreds of pages and millions of elements make this approach especially valuable.

Predictive Defect Detection and Root Cause Analysis

AI excels at finding patterns in large datasets to predict where problems might happen. It examines historical test outcomes to forecast potential defects before they reach production [13].

This predictive capability lets your team focus testing efforts on high-risk areas. Resource allocation becomes more efficient. Research shows AI-powered root cause analysis can reduce issue detection time by up to 90% and improve resource allocation efficiency from 60% to 85% [14].

AI doesn’t just find defects—it pinpoints their origins by connecting test failures to specific code changes [13]. Tools like Railtown.ai analyze logs, code changes, and patterns to resolve issues quickly and precisely. The debugging process becomes significantly more efficient.
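Connecting test failures to specific code changes can be approximated with a simple heuristic, sketched below in Python. It is a crude coverage-based score, not the method used by any tool named here: for each changed file, it computes the share of tests touching that file that failed. The function name and the data shapes (coverage as test name to set of files, results as test name to pass flag) are assumptions.

```python
def rank_suspect_files(changed_files, coverage, results):
    """Rank changed files by the share of tests covering them that failed.

    coverage: dict mapping test name -> set of files the test exercises
    results:  dict mapping test name -> True if the test passed
    Returns a list of (path, score, failed_count), most suspicious first.
    """
    ranked = []
    for path in changed_files:
        touching = [test for test, files in coverage.items() if path in files]
        failed = sum(1 for test in touching if not results.get(test, True))
        if failed == 0:
            continue  # no failing test exercises this file
        ranked.append((path, round(failed / len(touching), 2), failed))
    ranked.sort(key=lambda row: (-row[1], -row[2], row[0]))
    return ranked


if __name__ == "__main__":
    coverage = {
        "test_cart": {"cart.py", "pricing.py"},
        "test_checkout": {"pricing.py", "payment.py"},
        "test_login": {"auth.py"},
        "test_search": {"cart.py"},
    }
    results = {"test_cart": False, "test_checkout": False,
               "test_login": True, "test_search": True}
    print(rank_suspect_files(["cart.py", "pricing.py", "auth.py"], coverage, results))
```

In this made-up run, pricing.py ranks first because every test that touches it failed, which gives the developer a concrete place to start looking.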

AI Tools That QA Teams Can Start Using Today

Several proven tools are already helping QA teams create smarter, more efficient test processes. Let me walk you through four standout solutions you can start using immediately.

Appvance for Behavior-Based Test Generation

Appvance’s AIQ platform stands out with its patented AI script generation (AISG) capability that actively learns your application through deep exploration. The system mimics real users—clicking, typing, and navigating through your interface—then automatically generates hundreds or thousands of valid test cases based on these behaviors [15].

What makes Appvance particularly valuable is its “vibe testing” approach. QA engineers can generate test scripts simply by describing what they want tested in plain English [16]. This cuts down the time needed to create comprehensive test suites while achieving approximately 10 times the user flow coverage compared to manually created tests [16].
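The general idea of generating tests by exploring an application can be sketched as a bounded walk over a map of screens and actions. The Python below is an illustration only and says nothing about how Appvance implements AISG; the transitions map, the explore_flows function, and the depth limit are hypothetical.

```python
from collections import deque


def explore_flows(transitions, start, max_depth=3):
    """Enumerate action sequences reachable from the start screen.

    transitions: dict mapping screen -> {action: next_screen}
    Returns a list of action lists, shortest flows first (breadth-first).
    """
    flows = []
    queue = deque([(start, [])])
    while queue:
        screen, actions = queue.popleft()
        if actions:
            flows.append(actions)
        if len(actions) >= max_depth:
            continue  # the depth limit also stops cycles from looping forever
        for action, next_screen in transitions.get(screen, {}).items():
            queue.append((next_screen, actions + [action]))
    return flows


if __name__ == "__main__":
    app = {
        "home": {"click Sign up": "form"},
        "form": {"submit valid": "welcome", "submit empty": "form_error"},
    }
    for flow in explore_flows(app, "home", max_depth=2):
        print(" -> ".join(flow))
```

Each returned sequence is a candidate user flow. Even a small screen map produces many of them, which is why exploration-based generation can reach far more flows than a hand-written suite.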

TestCraft for Continuous Testing

TestCraft takes an open-source approach to AI testing, focusing on practical utilities that integrate with your existing workflows [17]. Their free browser extension helps QA teams select any element and instantly receive test ideas, automation code, and WCAG accessibility checks [17].

The tool exports seamlessly to popular frameworks like Playwright, Cypress, and Selenium. This makes it ideal for teams looking to enhance their continuous testing pipelines without abandoning their current tools [17]. TestCraft’s API Automation Agent can process an OpenAPI specification and quickly generate a runnable test automation framework [17].
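To give a feel for what turning an OpenAPI specification into tests involves, here is a minimal Python sketch that walks the spec's paths and documented responses and produces one planned test case per combination. It does not reflect TestCraft's actual output; plan_api_tests and the naming scheme are assumptions, and the spec is passed in as an already-parsed dictionary.

```python
import re

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def plan_api_tests(spec):
    """Derive one planned test case per path, method, and documented status."""
    cases = []
    for path, operations in spec.get("paths", {}).items():
        slug = re.sub(r"[^a-z0-9]+", "_", path.lower()).strip("_") or "root"
        for method, operation in operations.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            for status in operation.get("responses", {}):
                cases.append({
                    "name": f"test_{method.lower()}_{slug}_{status}",
                    "method": method.upper(),
                    "path": path,
                    "expected_status": str(status),
                })
    return cases


if __name__ == "__main__":
    spec = {"paths": {"/users/{id}": {
        "parameters": [],
        "get": {"responses": {"200": {}, "404": {}}},
    }}}
    for case in plan_api_tests(spec):
        print(case["name"], case["method"], case["path"], case["expected_status"])
```

A full generator would also build request bodies and assertions from the schemas, but the plan above is the skeleton those details hang on.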

Functionize for Cloud-Based AI Testing

Functionize delivers cloud-based testing infrastructure with a team of specialized AI agents. Generate, Diagnose, Maintain, Document, and Execute form what they call “Digital Workers” for quality assurance [18]. According to Functionize, GE Healthcare reduced testing time from 40 hours to just 4 hours, a 90% labor saving [18].

The platform handles complex testing scenarios across multiple browsers, mobile devices, and cloud environments without requiring teams to build and maintain complex infrastructure [19]. Its intelligent scheduling capabilities can automate over 1,000 workflows within minutes [19].

Applitools for Visual AI Regression

Applitools Eyes uses Visual AI technology to detect visual and functional regressions in your applications with remarkable accuracy [20]. Instead of simple pixel-by-pixel comparisons, Applitools intelligently recognizes elements with properties like dimensions, color, content, and placement—similar to how the human eye works [21].

A key advantage is its ability to recognize dynamic content like ads and personalized dashboards while ignoring expected variations such as dates and transaction IDs [5]. The tool integrates with over 50 test frameworks including Cypress and Selenium, requiring just a single snippet of code to capture and analyze an entire screen [5].
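Ignoring expected variations such as dates and transaction IDs can be illustrated on text content with a few lines of Python. This is not the Applitools API. It is a plain regular-expression sketch, and the date format, the TXN- prefix, and the function names are hypothetical.

```python
import re

# Patterns for content that is expected to change between runs (assumed formats).
_DYNAMIC_PATTERNS = [
    (re.compile(r"\b\d{4}-\d{2}-\d{2}\b"), "<date>"),
    (re.compile(r"\bTXN-[A-Z0-9]+\b"), "<txn>"),
]


def mask_dynamic(text: str) -> str:
    """Replace dates and transaction IDs with fixed placeholders."""
    for pattern, placeholder in _DYNAMIC_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


def same_content(baseline: str, candidate: str) -> bool:
    """Compare two strings after masking the parts expected to vary."""
    return mask_dynamic(baseline) == mask_dynamic(candidate)


if __name__ == "__main__":
    before = "Order TXN-8F3A2 placed on 2024-05-01"
    after = "Order TXN-11B9C placed on 2024-06-17"
    print(same_content(before, after))
```

The comparison passes when only the masked parts differ and fails when the surrounding wording changes, so real regressions still surface.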

Benefits of AI in Software Test Automation

“Automation is an accelerator, not a replacement. It’s about putting brains in your muscles.”

Chris Launey, Principal Engineer at Starbucks

The business impact of AI in software testing goes beyond what most people expect. When you implement AI-driven test automation properly, it delivers measurable advantages that hit your bottom line directly.

Faster Test Execution and Feedback Loops

AI speeds up testing cycles by optimizing how tests run through intelligent prioritization and parallelization. Instead of running all tests one after another, AI looks at risk factors and historical data to decide which tests should run first. Development teams get critical feedback much faster – root-cause analysis tasks that used to take days now get resolved in minutes [22]. Organizations using AI-driven testing report productivity increases of up to 21% [23]. This means quicker release cycles and faster time to market.
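Risk-based ordering can be sketched with a simple scoring rule. The Python below ranks tests by historical failure rate plus a fixed bonus when a test exercises a file changed in the current commit. The weights, field names, and the prioritize function are assumptions made for illustration; an AI-driven tool would learn its scoring from data.

```python
def prioritize(tests, changed_files, change_bonus=1.0):
    """Return test names ordered from highest to lowest estimated risk.

    tests: list of dicts with "name", "runs", "failures", and "files"
    changed_files: files modified in the commit under test
    """
    changed = set(changed_files)

    def risk(test):
        runs = test["runs"]
        failure_rate = test["failures"] / runs if runs else 0.0
        touches_change = bool(changed & set(test["files"]))
        return failure_rate + (change_bonus if touches_change else 0.0)

    return [test["name"] for test in sorted(tests, key=risk, reverse=True)]


if __name__ == "__main__":
    history = [
        {"name": "test_a", "runs": 100, "failures": 1, "files": ["a.py"]},
        {"name": "test_b", "runs": 100, "failures": 20, "files": ["b.py"]},
        {"name": "test_c", "runs": 100, "failures": 5, "files": ["c.py"]},
    ]
    print(prioritize(history, changed_files=["c.py"]))
```

Running the highest-risk tests first means a breaking change is likely to fail within the first few tests instead of at the end of the suite.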

Improved Test Coverage with Less Manual Effort

Traditional testing methods struggle with comprehensive coverage because of time constraints. AI can increase test coverage by up to 85% [24] while cutting down the manual effort your team needs to put in. AI analyzes application behavior, finds testing gaps, and automatically generates test cases for scenarios you might otherwise miss. One electronics manufacturer reduced missed defects by 30% after improving their data preparation [23].

Reduced Maintenance Through Self-Healing Scripts

Test script maintenance eats up substantial QA resources. AI tackles this challenge through self-healing capabilities that automatically update scripts when application changes happen. This eliminates frequent manual test updates, lets QA teams focus on higher-value tasks, and significantly decreases automation test failure rates [25]. You get lower maintenance costs and more stable CI/CD pipelines. This leads to faster releases and higher return on investment.
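The core of self-healing is a fallback: when the primary locator no longer matches, the script tries alternatives and records which one worked. The Python sketch below models the page as a list of attribute dictionaries so it runs without a browser. The function names and the locator format are hypothetical, and real tools typically rank candidate elements with a learned model instead of using a fixed list.

```python
def find_element(dom, locator):
    """Return the first element whose attribute equals the locator value."""
    attribute, value = locator
    for element in dom:
        if element.get(attribute) == value:
            return element
    return None


def find_with_healing(dom, locators):
    """Try locators in priority order.

    Returns (element, locator_used, healed), where healed is True when a
    fallback locator was needed because the primary one no longer matched.
    """
    for index, locator in enumerate(locators):
        element = find_element(dom, locator)
        if element is not None:
            return element, locator, index > 0
    raise LookupError(f"No locator matched: {locators!r}")


if __name__ == "__main__":
    # The button's id changed in a release, but its visible text did not.
    page = [{"id": "btn-register-v2", "text": "Register", "role": "button"}]
    element, used, healed = find_with_healing(
        page, [("id", "btn-register"), ("text", "Register")]
    )
    print(used, healed)
```

When healed is True, a tool can update the stored locator so the next run succeeds on the first attempt, which is where the maintenance savings come from.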

Enhanced Accuracy in Defect Detection

AI systems excel at pattern recognition. They identify subtle defects that manual testing might miss. These systems can reduce false positives by up to 86% while maintaining high detection accuracy [23]. AI-powered visual testing tools detect UI inconsistencies across browsers and devices with remarkable precision. Defects get caught before reaching production.

Tackling Common Obstacles in AI-Driven QA

AI testing capabilities are impressive, but several obstacles can stop successful implementation cold. I’ve seen teams struggle with these challenges, and understanding them upfront helps you navigate the path to effective AI-driven QA.

Getting High-Quality Training Data Right

Here’s the reality: AI models need extensive, high-quality data to work effectively. Poor quality data in test management leads to inaccurate predictions and automation that doesn’t deliver [2]. This is where many teams hit their first major roadblock.

You need to tackle this systematically:

- Implement data preprocessing pipelines to standardize datasets

- Use automated validation tools to identify inconsistencies

- Engage QA professionals to review and annotate datasets [1]

AI-based testing systems typically struggle with inconsistent data formats from multiple sources. This makes it difficult for AI models to process information effectively [26]. It’s a common problem, but one you can solve with the right approach.
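A preprocessing step for inconsistent formats can be as simple as mapping every source's field names and status labels onto one schema. The Python sketch below shows the idea; the accepted field names, the status vocabulary, and normalize_record itself are assumptions about what your sources might look like.

```python
# Map the status labels different tools emit onto one vocabulary (assumed labels).
_STATUS_MAP = {
    "pass": "passed", "passed": "passed", "ok": "passed", "success": "passed",
    "fail": "failed", "failed": "failed", "error": "failed",
    "skip": "skipped", "skipped": "skipped",
}


def normalize_record(raw):
    """Convert one raw test result into a standard {name, status, duration_s} dict."""
    name = raw.get("name") or raw.get("test") or raw.get("test_name")
    status_raw = str(raw.get("status") or raw.get("result") or "").strip().lower()
    if not name or status_raw not in _STATUS_MAP:
        # Reject records that cannot be interpreted instead of guessing.
        raise ValueError(f"Cannot normalize record: {raw!r}")
    if "duration_ms" in raw:
        duration_s = float(raw["duration_ms"]) / 1000.0
    elif "duration_s" in raw:
        duration_s = float(raw["duration_s"])
    else:
        duration_s = None
    return {"name": name, "status": _STATUS_MAP[status_raw], "duration_s": duration_s}


if __name__ == "__main__":
    print(normalize_record({"test": "login", "status": "PASS", "duration_ms": 1500}))
    print(normalize_record({"name": "login", "result": "Failed ", "duration_s": 2}))
```

Rejecting unreadable records loudly, instead of silently guessing, keeps bad rows out of the training set and gives your QA reviewers a clear list of what needs manual attention.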

Closing the AI Skills Gap for Your Testing Team

The numbers tell a challenging story. Research from Reuters shows an expected AI talent gap of 50% in 2024 [27]. This shortage directly affects AI adoption in software testing across organizations. Many employees believe the AI skill gap is primarily a training gap, with companies falling behind in upskilling employees on AI usage [27].

Your organization should focus on three key areas:

- Assess the AI readiness of current employees

- Develop interactive, customizable learning programs

- Partner with educational institutions for specialized training

The skill gap is real, but it’s not insurmountable if you invest in your people.

Making AI Decisions Transparent

AI systems often operate as “black boxes,” making their decision processes difficult to understand. This lack of transparency raises concerns about whether requirements can be met consistently, especially in safety-critical applications [28].

Transparency helps people access information to better understand how an AI system was created and how it makes decisions [29]. Without this visibility, stakeholders may distrust AI-generated test results. You can’t afford that kind of skepticism from your team or clients.
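One practical way to open the black box is to report why a score came out the way it did. The Python sketch below uses a deliberately simple linear risk score so that each input's contribution can be listed next to the result. The feature names and weights are invented for the example and are not taken from any tool.

```python
# Hypothetical weights for a linear risk score; a real model would learn these.
WEIGHTS = {
    "lines_changed": 0.002,
    "recent_failures": 0.15,
    "author_new_to_module": 0.2,
}


def explain_risk(features):
    """Return (score, reasons), where reasons lists each feature's contribution.

    The score is capped at 1.0; reasons are sorted from largest to smallest.
    """
    contributions = {
        name: weight * features.get(name, 0) for name, weight in WEIGHTS.items()
    }
    score = min(1.0, sum(contributions.values()))
    reasons = sorted(contributions.items(), key=lambda item: item[1], reverse=True)
    return score, reasons


if __name__ == "__main__":
    score, reasons = explain_risk(
        {"lines_changed": 100, "recent_failures": 2, "author_new_to_module": 1}
    )
    print(f"risk {score:.2f}")
    for name, contribution in reasons:
        print(f"  {name}: +{contribution:.2f}")
```

A stakeholder who sees that recent failures drove most of the score has something to verify or challenge, which is harder to do with a bare number.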

Finding the Right Balance Between Human Oversight and AI Autonomy

Even though AI offers significant efficiency gains in software test automation, finding the right balance between human oversight and AI autonomy remains crucial. Too much emphasis on human oversight can reduce an AI system’s effectiveness: when every finding is routed to a person, reviewers develop alert fatigue, and the AI never gets to take automatic responsive measures [30].

Humans bring ethical decision-making, accountability, adaptability, and continuous improvement capabilities that complement AI’s analytical strengths [31]. The combination of human intelligence and AI creates a powerful approach to quality assurance. The key is knowing when to step in and when to let the AI do its work.
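Knowing when to step in can be written down as an explicit routing rule. The Python sketch below sends critical findings to a person regardless of confidence, lets high-confidence findings proceed automatically, and only logs low-confidence ones to limit alert fatigue. The thresholds, severity labels, and outcome names are assumptions you would tune for your own team.

```python
def route_finding(confidence, severity, auto_threshold=0.9, review_threshold=0.5):
    """Decide who handles an AI-generated finding.

    Returns "human_review", "auto_apply", or "log_only".
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if severity == "critical":
        return "human_review"  # humans stay accountable for high-stakes calls
    if confidence >= auto_threshold:
        return "auto_apply"
    if confidence >= review_threshold:
        return "human_review"
    return "log_only"  # too uncertain to act on or to interrupt a reviewer


if __name__ == "__main__":
    for confidence, severity in [(0.95, "minor"), (0.95, "critical"),
                                 (0.7, "minor"), (0.2, "minor")]:
        print(confidence, severity, route_finding(confidence, severity))
```

Making the rule explicit also makes it reviewable: the team can see and adjust exactly where autonomy ends and oversight begins.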

Conclusion

AI-powered software testing changes how quality assurance works. You’ve seen how machine learning, natural language processing, and computer vision make testing smarter and more efficient. These technologies solve QA challenges that teams have struggled with for years.

The use cases we covered show AI’s versatility across your testing lifecycle. Test generation, synthetic data creation, visual testing, and predictive defect detection give your team capabilities that weren’t possible before. Tools like Appvance, TestCraft, Functionize, and Applitools make these techniques accessible without requiring deep AI expertise.

I’ve seen teams get faster feedback loops, better test coverage, reduced maintenance work, and more accurate defect detection when they adopt AI properly. These improvements translate directly into business value through quicker releases and higher-quality software.

But successful implementation requires honest acknowledgment of the challenges. High-quality training data remains essential. Your team needs targeted training programs to bridge skill gaps. You need transparency in AI decisions and the right balance between human oversight and AI autonomy.

The future belongs to teams that combine human expertise with AI capabilities effectively. AI doesn’t replace human testers – it amplifies what they can do and frees them to focus on creative, high-value work. Your QA processes will become more intelligent and adaptive as these technologies evolve.

Think of AI implementation as a journey, not a destination. Start small with focused applications. Measure your results. Gradually expand your AI testing footprint. This approach ensures you get maximum value while minimizing disruption to your existing workflows.

If you’re ready to make your testing smarter, pick one AI tool from this guide and try it with your team. The technology is here. The benefits are real. Your competition is probably already exploring these capabilities.

FAQs

Q1. How does AI improve software testing efficiency? AI enhances software testing efficiency by automating test case generation, executing tests faster, and providing quicker feedback loops. It can analyze vast amounts of data to identify patterns and predict potential defects, allowing QA teams to focus on high-risk areas and reduce manual effort.

Q2. What are some popular AI-powered testing tools available today? Some popular AI-powered testing tools include Appvance for behavior-based test generation, TestCraft for continuous testing, Functionize for cloud-based AI testing, and Applitools for visual AI regression testing. These tools offer various capabilities to enhance test automation and improve overall software quality.

Q3. Can AI completely replace human testers in QA processes? No, AI cannot completely replace human testers. While AI excels at automating repetitive tasks and analyzing large datasets, human testers bring critical thinking, creativity, and contextual understanding to the testing process. The ideal approach combines AI capabilities with human expertise for optimal results.

Q4. What challenges might teams face when implementing AI in software testing? Common challenges in implementing AI for software testing include ensuring high-quality training data, bridging the AI skill gap among team members, maintaining transparency in AI decision-making processes, and finding the right balance between human oversight and AI autonomy.

Q5. How does AI contribute to visual regression testing? AI enhances visual regression testing through computer vision technology. It can detect subtle visual defects across different browsers and devices, identify misaligned elements, and validate UI consistency more effectively than traditional pixel-by-pixel comparisons. This approach is particularly valuable for complex, modern web applications with numerous pages and elements.

References

[1] – https://docs.saucelabs.com/insights/failure-analysis/

[2] – https://testrigor.com/blog/machine-learning-to-predict-test-failures/

[3] – https://www.lambdatest.com/learning-hub/nlp-testing

[4] – https://www.researchgate.net/publication/377693549_Software_Test_Case_Generation_Using_Natural_Language_Processing_NLP_A_Systematic_Literature_Review

[5] – https://testrigor.com/ai-in-software-testing/

[6] – https://www.ericsson.com/en/blog/2022/12/visual-regression-testing-ai

[7] – https://aws.amazon.com/blogs/industries/using-generative-ai-to-create-test-cases-for-software-requirements/

[8] – https://www.tricentis.com/blog/10-ai-use-cases-in-test-automation

[9] – https://learn.microsoft.com/en-us/azure/ai-foundry/concepts/concept-synthetic-data

[10] – https://www.tonic.ai/guides/guide-to-synthetic-test-data-generation

[11] – https://www.forbes.com/sites/bernardmarr/2024/08/29/20-generative-ai-tools-for-creating-synthetic-data/

[12] – https://applitools.com/blog/top-10-visual-testing-tools/

[13] – https://www.testingtools.ai/blog/ai-root-cause-analysis-for-test-failures-key-benefits/

[14] – https://www.botable.ai/blog/ai-root-cause-analysis

[15] – https://docs.appvance.net/Content/AIQ/AI/Test_Generation.htm

[16] – https://appvance.ai/blog/vibe-testing-the-future-of-effortless-qa-automation

[17] – https://home.testcraft.app/

[18] – https://www.functionize.com/

[19] – https://www.functionize.com/automation-cloud

[20] – https://applitools.com/solutions/regression-testing/

[21] – https://applitools.com/blog/visual-regression-testing/

[22] – https://applitools.com/platform/eyes/

[23] – https://www.functionize.com/blog/the-role-of-ai-in-scaling-test-automation

[24] – https://www.testingtools.ai/blog/how-ai-improves-defect-tracking-accuracy/

[25] – https://www.lambdatest.com/blog/ai-driven-test-execution-strategy-optimization/

[26] – https://www.accelq.com/blog/self-healing-test-automation/

[27] – https://www.qmetry.com/blog/challenges-and-opportunities-of-implementing-ai-in-test-management

[28] – https://testrigor.com/blog/top-challenges-in-ai-driven-quality-assurance/

[29] – https://www.functionize.com/blog/the-importance-of-data-quality-in-ai-based-testing

[30] – https://www.ibm.com/think/insights/ai-skills-gap

[31] – https://www.qualitymag.com/articles/98248-balancing-innovation-and-safety-the-complex-role-of-ai-in-quality-assurance

[32] – https://www.ibm.com/think/topics/ai-transparency

[33] – https://www.computerweekly.com/opinion/Security-Think-Tank-Balancing-human-oversight-with-AI-autonomy

[34] – https://www.cornerstoneondemand.com/resources/article/the-crucial-role-of-humans-in-ai-oversight/